Testing

Workflows

- Master - Runs tests on Python 3 for every target on merges to the master branch

- PR - Runs tests on Python 2 & 3 for any modified target in a pull request as long as the base or developer packages were not modified

- PR All - Runs tests on Python 2 & 3 for every target in a pull request if the base or developer packages were modified

- Nightly minimum base package test - Runs tests for every target once nightly using the minimum declared required version of the base package

- Nightly Python 2 tests - Runs tests on Python 2 for every target once nightly

- Test Agent release - Runs tests for every target when manually scheduled using specific versions of the Agent for E2E tests
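The choice between the PR and PR All workflows can be sketched as below. This is an illustration, not the actual matrix generator, and it assumes the base and developer packages live in target directories named datadog_checks_base and datadog_checks_dev.

```python
from __future__ import annotations

# Assumption: the base and developer packages live in these target directories.
CORE_PACKAGES = {"datadog_checks_base", "datadog_checks_dev"}


def select_pr_workflow(modified: set[str], all_targets: set[str]) -> tuple[str, set[str]]:
    """Return the workflow that applies to a pull request and the targets it tests."""
    if modified & CORE_PACKAGES:
        # Every target depends on the base/dev packages, so test everything.
        return "PR All", set(all_targets)
    # Otherwise test only the targets the pull request touched.
    return "PR", modified & all_targets


if __name__ == "__main__":
    targets = {"datadog_checks_base", "datadog_checks_dev", "postgres", "mysql"}
    print(select_pr_workflow({"postgres"}, targets))
```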

Reusable workflows

These can be used by other repositories.

PR test

This workflow is meant to be used on pull requests.

First, it computes the job matrix based on what was changed. Since this is time-sensitive, rather than fetching the entire history, we use GitHub's API to find out the precise depth to fetch in order to reach the merge base. Then it runs the test workflow for every job in the matrix.
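One way to derive a fetch depth from GitHub's API is the REST "compare two commits" endpoint, whose response includes ahead_by and behind_by counts relative to the merge base. The sketch below is a hypothetical illustration, not the workflow's actual logic, and it assumes linear history on both sides of the comparison; merge commits inside the range can change the graph depth.

```python
from __future__ import annotations

import json
from urllib.request import Request, urlopen


def compare_refs(repo: str, base: str, head: str, token: str | None = None) -> dict:
    """Call GitHub's REST compare endpoint for base...head (repo is "owner/name")."""
    request = Request(
        f"https://api.github.com/repos/{repo}/compare/{base}...{head}",
        headers={"Accept": "application/vnd.github+json"},
    )
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    with urlopen(request) as response:
        return json.load(response)


def fetch_depth(comparison: dict) -> int:
    """Depth that reaches the merge base when fetching both base and head.

    ahead_by/behind_by count commits on each side since the merge base;
    one extra level brings in the merge base commit itself. Assumes linear
    history on both sides.
    """
    return max(comparison["ahead_by"], comparison["behind_by"]) + 1
```

The resulting number would then be passed to something like git fetch --depth=N for both refs, after which a diff against the merge base is possible without the full history.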

Note

Changes that match any of the following patterns inside a directory will trigger the testing of that target:

- assets/configuration/**/*

- tests/**/**.py

- hatch.toml

- metadata.csv

- pyproject.toml
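Matching changed files against these patterns can be sketched as follows. This is an assumption-laden illustration: it treats the first path component as the target directory and only implements the pattern rule from the note above. A small glob translator is used because fnmatch lets * cross "/", so tests/**/**.py would not match tests/test_unit.py with it.

```python
from __future__ import annotations

import re

# The trigger patterns from the note above, relative to each target's directory.
TRIGGER_PATTERNS = [
    "assets/configuration/**/*",
    "tests/**/**.py",
    "hatch.toml",
    "metadata.csv",
    "pyproject.toml",
]


def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a glob where ** spans directories and * stays within one segment."""
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append("(?:.*/)?")  # zero or more directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(".*")  # anything, including slashes
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")  # anything within a single path segment
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


COMPILED = [glob_to_regex(p) for p in TRIGGER_PATTERNS]


def targets_to_test(changed_files: list[str]) -> set[str]:
    """Return the targets whose changed files match any trigger pattern."""
    targets = set()
    for path in changed_files:
        target, sep, relative = path.partition("/")
        if sep and any(rx.fullmatch(relative) for rx in COMPILED):
            targets.add(target)
    return targets
```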

Warning

A matrix is limited to 256 jobs. Rather than allowing a workflow error, the matrix generator will enforce the cap and emit a warning.
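Enforcing the cap instead of letting GitHub reject the workflow can be as simple as truncating the job list and emitting a ::warning:: workflow command, which GitHub Actions shows as an annotation. The sketch assumes plain truncation; the real generator may choose which jobs to drop differently.

```python
from __future__ import annotations

# GitHub Actions generates at most 256 jobs per matrix in a workflow run.
MAX_MATRIX_JOBS = 256


def cap_matrix(jobs: list[dict], limit: int = MAX_MATRIX_JOBS) -> list[dict]:
    """Truncate the job list to the limit, warning instead of failing."""
    if len(jobs) <= limit:
        return list(jobs)
    # ::warning:: is a GitHub Actions workflow command; it surfaces as an annotation.
    print(f"::warning::Job matrix truncated from {len(jobs)} to {limit} jobs")
    return list(jobs[:limit])
```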

Test target

This workflow runs a single job that is the foundation of how all tests are executed. Depending on the input parameters, the order of operations is as follows:

- Checkout code (on pull requests this is a merge commit)

- Set up Python 2.7

- Set up the Python version the Agent currently ships

- Restore dependencies from the cache

- Install & configure ddev

- Run any setup scripts the target requires

- Start an HTTP server to capture traces

- Run unit & integration tests

- Run E2E tests

- Run benchmarks

- Upload captured traces

- Upload collected test results

- Submit coverage statistics to Codecov
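How input parameters might gate the steps above can be sketched as an ordered list of (step, enabled) pairs. The flag names in TargetInputs are hypothetical stand-ins, not the workflow's real inputs; the point is only that optional steps are skipped while the documented order is preserved.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TargetInputs:
    # Hypothetical flags standing in for the workflow's real input parameters.
    test_py2: bool = True
    has_setup_scripts: bool = False
    capture_traces: bool = True
    run_e2e: bool = True
    run_benchmarks: bool = False
    upload_coverage: bool = True


def plan_steps(inputs: TargetInputs) -> list[str]:
    """Return the enabled steps, preserving the documented order."""
    steps = [
        ("Checkout code", True),
        ("Set up Python 2.7", inputs.test_py2),
        ("Set up the Python version the Agent currently ships", True),
        ("Restore dependencies from the cache", True),
        ("Install & configure ddev", True),
        ("Run setup scripts", inputs.has_setup_scripts),
        ("Start trace capture server", inputs.capture_traces),
        ("Run unit & integration tests", True),
        ("Run E2E tests", inputs.run_e2e),
        ("Run benchmarks", inputs.run_benchmarks),
        ("Upload captured traces", inputs.capture_traces),
        ("Upload collected test results", True),
        ("Submit coverage statistics to Codecov", inputs.upload_coverage),
    ]
    return [name for name, enabled in steps if enabled]
```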

Target setup

Some targets require additional setup, such as the installation of system dependencies. Therefore, all such logic is put into scripts that live under /.ddev/ci/scripts.

As targets may need different setup on different platforms, all scripts live under a directory named after the platform ID. All scripts in the directory are executed in lexicographical order. Files in the scripts directory whose names begin with an underscore are not executed.

The step that executes these scripts is the only step that has access to secrets.
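The discovery rules above (per-platform directory, lexicographical order, underscore-prefixed files skipped) can be sketched like this. The directory layout passed to run_setup_scripts is an assumption, and running each script through bash mirrors the Windows caveat below rather than any confirmed implementation detail.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def select_scripts(names: list[str]) -> list[str]:
    """Lexicographic order, skipping names that start with an underscore."""
    return sorted(name for name in names if not name.startswith("_"))


def run_setup_scripts(target_scripts_dir: Path, platform_id: str, env: dict[str, str] | None = None) -> None:
    """Run every eligible script for one platform, stopping at the first failure."""
    platform_dir = target_scripts_dir / platform_id
    if not platform_dir.is_dir():
        return  # nothing to set up on this platform
    files = [p.name for p in platform_dir.iterdir() if p.is_file()]
    for name in select_scripts(files):
        # Bash is used as the default shell on every platform.
        subprocess.run(["bash", str(platform_dir / name)], check=True, env=env)
```

Underscore-prefixed files are typically helpers that other scripts source, which is why they are excluded from direct execution.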

Secrets

Since environment variables defined in a workflow do not propagate to reusable workflows, secrets must be passed as a JSON string representing a map.

Both the PR test and Test target reusable workflows accept a setup-env-vars input parameter that defines the environment variables for the setup step. For example:

```yaml
jobs:
  test:
    uses: DataDog/integrations-core/.github/workflows/pr-test.yml@master
    with:
      repo: "<NAME>"
      setup-env-vars: >-
        ${{ format(
          '{{
            "PYTHONUNBUFFERED": "1",
            "SECRET_FOO": "{0}",
            "SECRET_BAR": "{1}"
          }}',
          secrets.SECRET_FOO,
          secrets.SECRET_BAR
        )}}
```
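On the receiving side, the JSON string has to be decoded into a map before it is applied to the setup step. The sketch below is a hypothetical consumer, not the workflow's code. Note that format() interpolates secrets verbatim, so a secret containing a double quote or backslash would produce invalid JSON; json.dumps, as in the test values, escapes such characters correctly.

```python
from __future__ import annotations

import json
import os


def parse_setup_env(raw: str) -> dict[str, str]:
    """Decode the setup-env-vars input into a flat string-to-string map."""
    if not raw.strip():
        return {}
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("setup-env-vars must be a JSON object")
    return {str(key): str(value) for key, value in data.items()}


def setup_step_env(raw: str) -> dict[str, str]:
    """Environment for the setup step only: the runner's env plus the decoded map."""
    env = dict(os.environ)
    env.update(parse_setup_env(raw))
    return env
```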

Note

Secrets for integrations-core itself are defined as the default value in the base workflow.

Environment variable persistence

If environment variables need to be available for testing, you can add a script that writes to the file defined by the GITHUB_ENV environment variable:

```bash
#!/bin/bash
set -euo pipefail

# Disable command tracing so the secret's value is not printed to the log
set +x
echo "LICENSE_KEY=$LICENSE_KEY" >> "$GITHUB_ENV"
set -x
```
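The same persistence can be done from Python. Single-line values use NAME=value, while multiline values need GitHub's NAME<<DELIMITER heredoc syntax; the randomized delimiter prefix below is an illustrative choice, not something this repository prescribes.

```python
from __future__ import annotations

import os
import uuid


def github_env_entry(name: str, value: str, delimiter: str | None = None) -> str:
    """Format one entry for the file named by GITHUB_ENV."""
    if "\n" not in value:
        return f"{name}={value}\n"
    # Multiline values use heredoc-style syntax; a random delimiter keeps the
    # value from accidentally (or maliciously) ending the block early.
    delimiter = delimiter or f"ghadelimiter_{uuid.uuid4()}"
    return f"{name}<<{delimiter}\n{value}\n{delimiter}\n"


def persist_env(name: str, value: str) -> None:
    """Append the entry so later steps in the job see the variable."""
    with open(os.environ["GITHUB_ENV"], "a", encoding="utf-8") as env_file:
        env_file.write(github_env_entry(name, value))
```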

Target configuration

Configuration for targets lives under the overrides.ci key inside a /.ddev/config.toml file.

Note

Targets are referenced by the name of their directory.

Platforms

| Name | ID | Default runner |
|---|---|---|
| Linux | linux | Ubuntu 22.04 |
| Windows | windows | Windows Server 2022 |
| macOS | macos | macOS 13 |

If an integration's manifest.json indicates that Windows is the only supported platform, tests run on Windows; otherwise, they run on Linux.

To override the platform(s) used, one can set the overrides.ci.<TARGET>.platforms array. For example:

```toml
[overrides.ci.sqlserver]
platforms = ["windows", "linux"]
```
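The platform rule can be sketched as below. The supported_os argument is a hypothetical, already-parsed list of the platforms declared in manifest.json; how the real tooling reads the manifest is not shown here.

```python
from __future__ import annotations


def resolve_platforms(target_overrides: dict, supported_os: list[str]) -> list[str]:
    """Pick the platform IDs a target is tested on."""
    if "platforms" in target_overrides:
        # An explicit overrides.ci.<TARGET>.platforms array wins.
        return list(target_overrides["platforms"])
    if {os_name.lower() for os_name in supported_os} == {"windows"}:
        # Windows-only integrations are tested on Windows.
        return ["windows"]
    return ["linux"]
```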

Runners

To override the runners for each platform, one can set the overrides.ci.<TARGET>.runners mapping of platform IDs to runner labels. For example:

```toml
[overrides.ci.sqlserver]
runners = { windows = ["windows-2025"] }
```
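Runner resolution is a per-platform lookup with a fallback to the defaults. The labels in DEFAULT_RUNNERS are assumed GitHub-hosted runner labels corresponding to the default runners in the platform table, not values read from this repository.

```python
from __future__ import annotations

# Assumed GitHub-hosted runner labels matching the default runners in the table above.
DEFAULT_RUNNERS = {
    "linux": ["ubuntu-22.04"],
    "windows": ["windows-2022"],
    "macos": ["macos-13"],
}


def resolve_runner(target_overrides: dict, platform_id: str) -> list[str]:
    """Runner labels for one platform, honoring overrides.ci.<TARGET>.runners."""
    runners = target_overrides.get("runners", {})
    return list(runners.get(platform_id, DEFAULT_RUNNERS[platform_id]))
```

Overriding one platform leaves the others on their defaults, so the sqlserver example above still runs its Linux jobs on the default Linux runner.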

Exclusion

To disable testing, one can enable the overrides.ci.<TARGET>.exclude option. For example:

```toml
[overrides.ci.hyperv]
exclude = true
```

Target enumeration

The list of all jobs is generated as the /.github/workflows/test-all.yml file.

This reusable workflow is called by workflows that need to test everything.
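Generating such a file can be sketched as rendering one job per target/platform pair that calls the Test target workflow. The job IDs, input names, and job dict shape below are hypothetical; the output is JSON, which is also valid YAML 1.2, so it could be written to a .yml file as-is.

```python
from __future__ import annotations

import json


def render_test_all(jobs: list[dict]) -> str:
    """Render a reusable workflow with one job per target/platform pair."""
    workflow_jobs = {}
    for job in jobs:
        job_id = f"{job['target']}-{job['platform']}"  # hypothetical ID scheme
        workflow_jobs[job_id] = {
            "uses": "./.github/workflows/test-target.yml",
            "with": {
                "target": job["target"],
                "platform": job["platform"],
                "runner": json.dumps(job["runner"]),
            },
            "secrets": "inherit",
        }
    return json.dumps({"on": {"workflow_call": {}}, "jobs": workflow_jobs}, indent=2)
```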

Tracing

During testing we use ddtrace to submit APM data to the Datadog Agent. To avoid every job pulling the Agent, these HTTP trace requests are captured and saved to a newline-delimited JSON file.

A workflow then runs after all jobs have finished and replays the requests to the Agent. At the end, the artifact is deleted, both to avoid needless storage persistence and so that, if individual jobs are rerun, only the new traces are submitted.
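A capture-and-replay loop of this kind can be sketched with the standard library. This is a hypothetical illustration, not the workflow's actual server: the file name is an assumption, bodies are base64-encoded because ddtrace sends binary payloads, and Host and Content-Length are dropped on replay so urllib can recompute them for the real Agent.

```python
from __future__ import annotations

import base64
import json
from http.server import BaseHTTPRequestHandler
from urllib.request import Request, urlopen

CAPTURE_FILE = "traces.ndjson"  # hypothetical artifact name
SKIP_ON_REPLAY = {"host", "content-length"}  # recomputed by urllib on replay


def encode_request(method: str, path: str, headers: dict[str, str], body: bytes) -> str:
    """Serialize one request as a single JSON line (bodies are binary, so base64)."""
    return json.dumps({
        "method": method,
        "path": path,
        "headers": headers,
        "body": base64.b64encode(body).decode("ascii"),
    })


def decode_request(line: str) -> dict:
    entry = json.loads(line)
    entry["body"] = base64.b64decode(entry["body"])
    return entry


class CaptureHandler(BaseHTTPRequestHandler):
    """Stands in for the Agent's trace intake and records every request."""

    def _capture(self) -> None:
        body = self.rfile.read(int(self.headers.get("Content-Length", 0)))
        line = encode_request(self.command, self.path, dict(self.headers.items()), body)
        with open(CAPTURE_FILE, "a", encoding="utf-8") as capture:
            capture.write(line + "\n")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", "2")
        self.end_headers()
        self.wfile.write(b"{}")

    do_PUT = _capture
    do_POST = _capture


def replay(capture_file: str, agent_url: str = "http://localhost:8126") -> None:
    """Send every captured request to a real Agent, in the original order."""
    with open(capture_file, encoding="utf-8") as capture:
        for line in capture:
            if not line.strip():
                continue
            entry = decode_request(line)
            headers = {k: v for k, v in entry["headers"].items() if k.lower() not in SKIP_ON_REPLAY}
            request = Request(agent_url + entry["path"], data=entry["body"], headers=headers, method=entry["method"])
            with urlopen(request) as response:
                response.read()
```

During a job, the handler could be served with HTTPServer(("127.0.0.1", 8126), CaptureHandler).serve_forever(), since 8126 is the port ddtrace targets by default.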

We maintain a public dashboard for monitoring our CI.

Test results

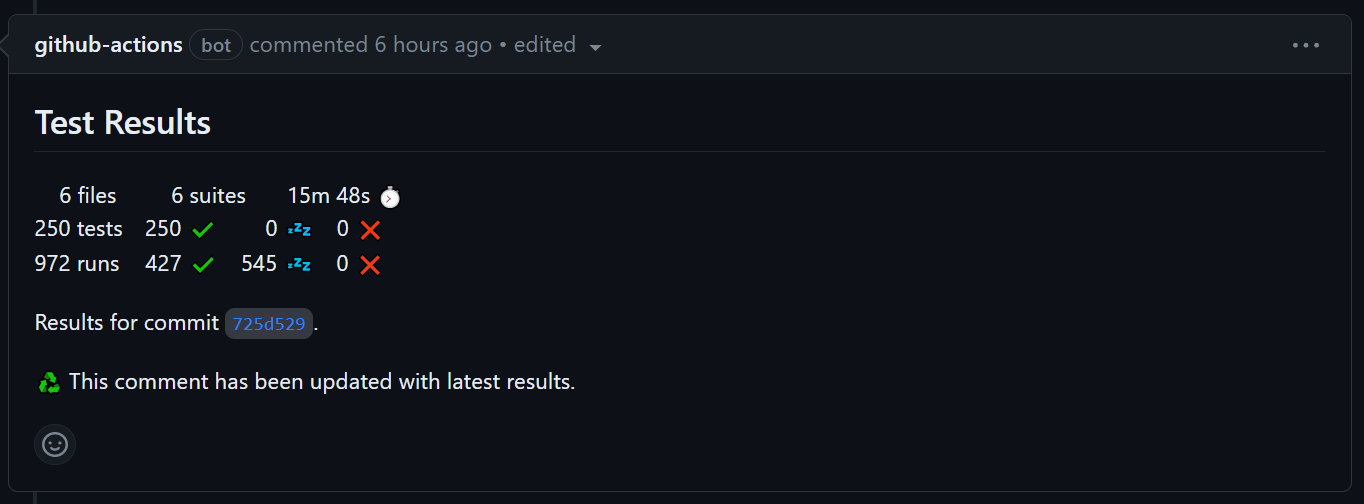

After all test jobs in a workflow complete we publish the results.

On pull requests we create a single comment that remains updated:

On merges to the master branch we generate a badge with stats about all tests:

Caching

On merges to the master branch, a workflow saves the dependencies shared by all targets for the current Python version on each platform, provided the files defining those dependencies have not changed.

During testing the cache is restored, with a fallback to an older compatible version of the cache.
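The fallback relies on how actions/cache resolves keys: it first looks for an exact key, then for the most recent cache whose key starts with one of the restore-keys prefixes. The key layout below is a hypothetical sketch that makes the exact key change whenever the dependency files change, while the prefix still matches older caches for the same platform and Python version.

```python
from __future__ import annotations

import hashlib


def cache_key(platform_id: str, python_version: str, dependency_files: dict[str, bytes]) -> tuple[str, list[str]]:
    """Return an exact cache key plus the restore-keys prefix used as a fallback."""
    digest = hashlib.sha256()
    for name in sorted(dependency_files):
        # Hash names and contents so any change to the dependency files yields a new key.
        digest.update(name.encode("utf-8") + b"\0")
        digest.update(dependency_files[name] + b"\0")
    prefix = f"deps-{platform_id}-py{python_version}-"
    return prefix + digest.hexdigest(), [prefix]
```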

Python version

Tests by default use the Python version the Agent currently ships. This value must be changed in the following locations:

- PYTHON_VERSION environment variable in /.github/workflows/cache-shared-deps.yml

- PYTHON_VERSION environment variable in /.github/workflows/run-validations.yml

- PYTHON_VERSION environment variable fallback in /.github/workflows/test-target.yml
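Because the value lives in several files, a small consistency check can catch a partial update. This hypothetical helper takes file contents as strings and extracts the first X.Y version on any line mentioning PYTHON_VERSION, which also covers a fallback expression; the input name and the 3.12 values in the tests are placeholders, not the version the Agent ships.

```python
from __future__ import annotations

import re

# First X.Y version on a line that mentions PYTHON_VERSION; also covers a
# fallback such as `inputs.python-version || '3.12'` (hypothetical input name).
VERSION_RE = re.compile(r"PYTHON_VERSION.*?(\d+\.\d+)")


def check_python_version(files: dict[str, str]) -> str:
    """Return the single Python version declared across files, or raise."""
    found: dict[str, set[str]] = {}
    for path, text in files.items():
        versions = set(VERSION_RE.findall(text))
        if not versions:
            raise ValueError(f"{path}: no PYTHON_VERSION value found")
        found[path] = versions
    distinct = set().union(*found.values())
    if len(distinct) != 1:
        raise ValueError(f"PYTHON_VERSION disagrees across files: {found}")
    return distinct.pop()
```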

Caveats

Windows performance

The first command invocation is extraordinarily slow (see actions/runner-images#6561). Bash appears to be the least affected, so we set it as the default shell for all workflows that run commands.

Note

The official checkout action is affected by a similar issue (see actions/checkout#1246) that has been narrowed down to disk I/O.